Introduction to Apache Airflow

Introduction

What is Apache Airflow?

Apache Airflow is an open-source platform to author, schedule, and monitor workflows. It was started at Airbnb in October 2014 and announced publicly in 2015, as a better way to quickly author, iterate on, and monitor the batch data pipelines handling the massive amount of data being processed at Airbnb.

Apache Airflow is now a project of the Apache Software Foundation. It has gained a lot of popularity thanks to its robustness and the flexibility that comes from using Python.

As Jarek Potiuk pointed out, Airflow is an orchestrator. It can be likened to the conductor of an orchestra: the conductor doesn’t play much, but controls the flow of everything and synchronizes everyone else doing the job.

Okay, cool. Since Apache Airflow manages workflows, what exactly is a workflow?

A workflow, in its simplest sense, is a sequence of tasks started on a schedule or triggered by an event.

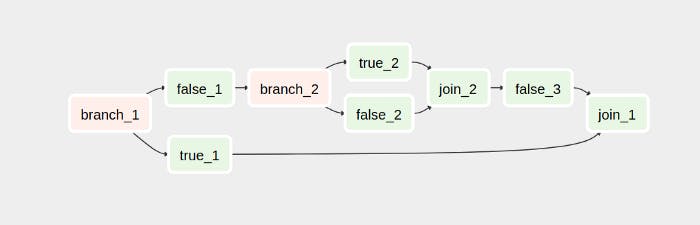

An Airflow workflow is designed as a directed acyclic graph (DAG). When authoring a workflow, you divide it into tasks that can be executed independently, then merge these tasks into a logical whole by combining them into a graph. Each task in a workflow should be idempotent, meaning that running it again with the same inputs leaves the same result.
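To make the idempotency point concrete, here is a minimal plain-Python sketch (not Airflow code). The `load_partition` function and the in-memory `warehouse` dict are hypothetical stand-ins for a task and its target table. The idea is that the task overwrites the data for its run date instead of appending to it, so a retry or a backfill does not create duplicates.

```python
from datetime import date

# Hypothetical in-memory "table" keyed by run date (stand-in for a real warehouse).
warehouse = {}


def load_partition(run_date, rows):
    """Idempotent load: replace the whole partition for run_date.

    Appending instead (warehouse[run_date] += rows) would duplicate rows
    every time the task is retried or backfilled.
    """
    warehouse[run_date] = list(rows)


run_date = date(2021, 1, 1)
load_partition(run_date, [1, 2, 3])
load_partition(run_date, [1, 2, 3])  # simulated rerun of the same task
print(warehouse[run_date])  # [1, 2, 3], not [1, 2, 3, 1, 2, 3]
```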

By now you might be wondering what the benefits of using Airflow are.

Benefits of Airflow

- It can handle upstream/downstream data gracefully

- Easy access to historical data (backfill and rerun for historical data)

- Easy error handling (retry if a failure happens)

- It has a fantastic community (you can join the Airflow community on Slack)

- Extendable through the use of operators

- It has fantastic logging capabilities

- Scalability and dependency management

- Powerful monitoring: you can run and view workflow execution in real time

- Programmatically author, schedule and monitor workflows or data pipelines

- Great alerting system

- Access through REST APIs

- It can be used locally and in the cloud (which is someone else’s computer 😆 😜)

Major managed Airflow services in the cloud include Astronomer (astronomer.io) and Google Cloud Composer.
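The "retry if a failure happens" benefit is easier to picture with a small sketch. In Airflow you would normally just set the `retries` and `retry_delay` arguments on a task and let the scheduler do the rest. The plain-Python function below is only an illustration of that behaviour, and `run_with_retries` and `flaky_extract` are hypothetical names, not part of Airflow.

```python
import time


def run_with_retries(task, retries=3, retry_delay=0.0):
    """Call task() until it succeeds or the retries are used up.

    retries is the number of extra attempts allowed after the first failure.
    """
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= retries:
                raise  # out of retries: surface the last error
            attempt += 1
            time.sleep(retry_delay)


calls = {"count": 0}


def flaky_extract():
    """Hypothetical task that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source not reachable yet")
    return "extracted"


result = run_with_retries(flaky_extract, retries=3)
print(result, "after", calls["count"], "attempts")  # extracted after 3 attempts
```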

Use cases of Airflow

Apache Airflow can be used for various purposes:

- ETL (extract, transform, load) jobs

- Extracting data from multiple sources

- Machine Learning pipelines

- Data warehousing

- Orchestrating automated testing

Here are some notable mentions

Concepts in Airflow

- OPERATORS

- DAG

- TASKS

- CONNECTORS

- HOOKS

- VARIABLES

- XCOM

- EXECUTORS

1. OPERATORS

An operator describes a single task in a workflow. Operators are usually (but not always) atomic, meaning they can stand on their own and don’t need to share resources with any other operators.

An operator is simply a Python class with an “execute()” method, which gets called when it is being run.

Types of Operators in Airflow

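- Action operators perform an action, such as running a shell command or a Python function

- Transfer operators move data from one system to another

- Sensor operators wait for a condition to be met before downstream tasks run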
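Here is a rough sketch of the "Python class with an execute() method" idea. In real Airflow, a custom operator subclasses `airflow.models.BaseOperator` and overrides `execute(self, context)`. To keep this runnable without Airflow installed, `SketchOperator` and `GreetOperator` below are hypothetical plain-Python stand-ins, and the `ds` key only mimics the date stamp that Airflow passes in the context.

```python
class SketchOperator:
    """Plain-Python stand-in for an operator (not Airflow's BaseOperator)."""

    def __init__(self, task_id):
        self.task_id = task_id

    def execute(self, context):
        raise NotImplementedError("subclasses define what the task does")


class GreetOperator(SketchOperator):
    """Action-style operator: does one unit of work when executed."""

    def __init__(self, task_id, name):
        super().__init__(task_id)
        self.name = name

    def execute(self, context):
        return f"Hello {self.name}, run date is {context['ds']}"


task = GreetOperator(task_id="greet", name="Airflow")
print(task.execute({"ds": "2021-01-01"}))  # Hello Airflow, run date is 2021-01-01
```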

2. DAG

DAGs describe how to run a workflow.

A DAG is defined in a Python script that contains a set of tasks and their dependencies.

Simply put, a DAG is a collection of tasks with defined dependencies and relationships.

A DagRun is an instance of a DAG. It is created by the scheduler, or it can be triggered manually.
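To see why the "directed acyclic" part matters, here is a small sketch using Python's standard `graphlib` module (Python 3.9+), not Airflow itself. The task names are made up. In a real Airflow DAG you would declare the same chain with the `>>` operator, for example `extract >> transform >> load`. Because the graph has no cycles, there is always a valid order in which to run the tasks.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; its value is the set of upstream tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def is_acyclic(deps):
    """Return True if the dependency graph has no cycles."""
    try:
        TopologicalSorter(deps).prepare()
    except CycleError:
        return False
    return True


order = list(TopologicalSorter(dependencies).static_order())
print(order)  # ['extract', 'transform', 'load', 'notify']
print(is_acyclic({"a": {"b"}, "b": {"a"}}))  # False: a and b wait on each other
```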

3. TASKS

Tasks are defined in DAGs, and both are written in Python. A task is an instance of an operator placed in a DAG.

What each task does is determined by the task’s operator.

4. CONNECTORS

The information needed to connect to external systems (host, port, login, password and so on) is stored as connectors, which Airflow itself calls Connections. Airflow keeps all of them in its metastore database.

⭐️ The Variables section can also hold this connection data and these parameters, but according to best practices they should be stored as connectors. But guess what? If you are one of those developers who don’t love reading documentation, you can store them wherever you like.

5. HOOKS

Hooks are used to interact with external systems such as S3, Hive, SFTP and databases.

Communication with external services is done through hooks:

operator <-> hook <-> external API
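The operator, hook, external API chain can be sketched in plain Python. Everything below is hypothetical: `FakeStorageClient` stands in for an external system, `StorageHook` for a hook and `UploadOperator` for an operator. None of them are real Airflow classes. The point is only that the operator never talks to the external system directly.

```python
class FakeStorageClient:
    """Hypothetical external system (stand-in for S3, SFTP, a database...)."""

    def __init__(self):
        self.objects = {}

    def put(self, key, data):
        self.objects[key] = data


class StorageHook:
    """Hook: wraps the external client behind a small interface."""

    def __init__(self, client):
        self._client = client

    def upload(self, key, data):
        self._client.put(key, data)


class UploadOperator:
    """Operator: describes the task and delegates the I/O to the hook."""

    def __init__(self, task_id, hook, key, data):
        self.task_id = task_id
        self.hook = hook
        self.key = key
        self.data = data

    def execute(self, context):
        self.hook.upload(self.key, self.data)
        return self.key


client = FakeStorageClient()
task = UploadOperator("upload_report", StorageHook(client), "report.csv", "a,b\n1,2\n")
task.execute(context={})
print(client.objects)  # {'report.csv': 'a,b\n1,2\n'}
```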

6. VARIABLES

Variables are a generic way to store and retrieve arbitrary content or settings as simple key-value pairs.

They can be likened to environment variables.

7. XCOM

Even though operators are meant to stand on their own, tasks sometimes still need to share information or a small amount of data (operator cross-communication), and this is where XCom comes into the picture.

XComs let tasks exchange messages. The name is an abbreviation of “cross-communication”.
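As a rough mental model, XCom behaves like a small shared key-value store. In real Airflow, tasks use `xcom_push` and `xcom_pull` on the task instance, and a task's return value is stored under the key `return_value`. The `XComStore` class below is a hypothetical in-memory stand-in, not Airflow's implementation (Airflow keeps XComs in its metadata database).

```python
class XComStore:
    """In-memory stand-in for XCom: small values keyed by task id and key."""

    def __init__(self):
        self._data = {}

    def push(self, task_id, key, value):
        self._data[(task_id, key)] = value

    def pull(self, task_id, key="return_value"):
        return self._data.get((task_id, key))


store = XComStore()


def count_rows():
    """Upstream task: shares a small result, not the rows themselves."""
    rows = ["a", "b", "c"]
    store.push("count_rows", "return_value", len(rows))


def report():
    """Downstream task: reads what the upstream task shared."""
    n = store.pull("count_rows")
    return f"count_rows produced {n} rows"


count_rows()
print(report())  # count_rows produced 3 rows
```

Note that only the row count is passed along here. XComs are meant for small pieces of data, not for whole datasets.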

8. EXECUTORS

Executors are the mechanism by which task instances get to run. Airflow has support for various executors.

Types of Executors

- DebugExecutor (runs tasks in a single process; simple, but not good for production)

- LocalExecutor (runs tasks in separate processes on the same machine)

- CeleryExecutor (sends tasks to Celery workers; hard to set up, but well suited for production)

- KubernetesExecutor (spawns a new pod with Airflow for each task; best suited for heavy tasks)

Executors are very important. At first the concept might appear complex, but there’s a thorough explanation provided by Astronomer.io.
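One way to think about executors: the tasks stay the same, and only the mechanism that runs them changes. The sketch below uses Python's standard `concurrent.futures` and is not how Airflow's executors are implemented. `make_task`, `run_in_single_process` and `run_in_worker_pool` are hypothetical names. A thread pool is used here only to keep the example portable, whereas Airflow's LocalExecutor uses separate processes.

```python
from concurrent.futures import ThreadPoolExecutor


def make_task(n):
    """Build a hypothetical task that squares n (stand-in for real work)."""
    def task():
        return n * n
    return task


def run_in_single_process(tasks):
    """Run one task after another in the calling process."""
    return [task() for task in tasks]


def run_in_worker_pool(tasks, max_workers=4):
    """Hand tasks to a pool of workers and collect results in submit order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(task) for task in tasks]
        return [future.result() for future in futures]


tasks = [make_task(n) for n in range(5)]
print(run_in_single_process(tasks))  # [0, 1, 4, 9, 16]
print(run_in_worker_pool(tasks))     # [0, 1, 4, 9, 16]
```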

CONCLUSION

One of the biggest benefits of Airflow is that it allows you to programmatically define workflows. It gives users the freedom to write what code to execute at every step of the pipeline.

Airflow also has a very powerful and well-equipped UI, which makes tracking jobs, backfilling jobs, and configuring the platform an easy task.

READY TO GET STARTED WITH APACHE AIRFLOW?

Here’s a quick rundown on how to spin up Apache Airflow on your local machine.

💡 Airflow is Python-based. The best way to install it is via the pip tool.

    # airflow needs a home, ~/airflow is the default,
    # but you can lay foundation somewhere else if you prefer
    # (optional)
    export AIRFLOW_HOME=~/airflow

Install from PyPI using pip:

    pip install apache-airflow

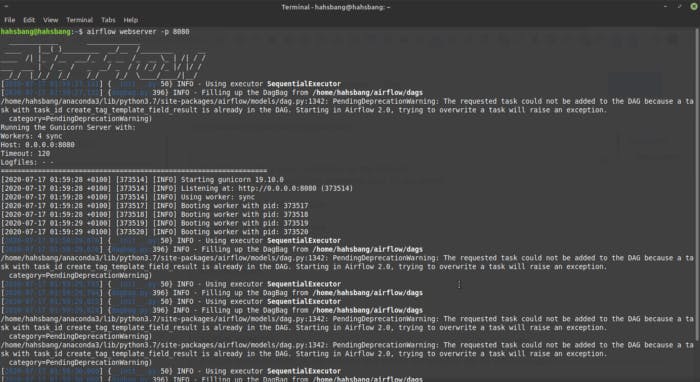

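Start the web server. On a fresh install you may first need to initialize the metadata database; the exact command depends on your Airflow version (for example, airflow db init on Airflow 2.x).

    airflow webserver -p 8080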

Apache Airflow Webserver Log


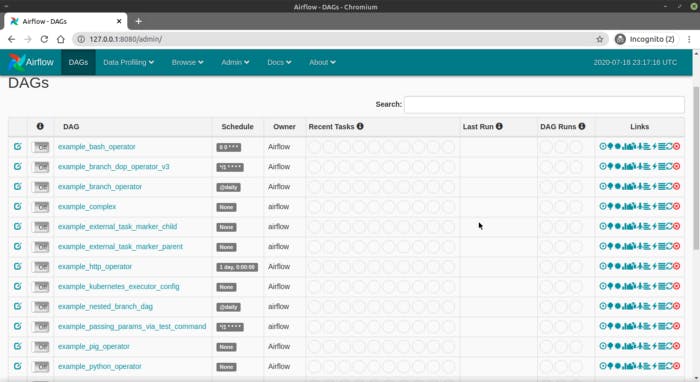

Now visit 0.0.0.0:8080 in your browser and you should see the Airflow UI 🚀

Apache Airflow UI


The Astronomer CLI is also a great tool (actually the easiest way to run Apache Airflow).

Watch out for more tutorials where I’ll be using the Astronomer CLI to create magic.

Take care, stay safe 🌊 and MAY THE FLOW BE WITH YOU

✌️ ✌️ 🚀 🚀 ✌️ ✌️

Further Reading